What is a System?

The word “system” derives from the Greek sunistanai (“to combine”) and is usually defined as a group of combined elements. This definition equates the system/element relation with the whole/part relation, but a system is not just a whole composed of parts, much less a relation of spatial containment between a whole and its parts. What is a system, then?[1]

Fundamental Concepts of Systems

A system is something more than a mere collection of elements, and this “more” is selectivity. A system selects how to combine elements, and the selected combination is its structure. A system is nothing but selection.

Let’s take the system of odd numbers as an example. This system is usually considered a set whose elements are 1, 3, 5, and so on. But again, a mere gathering of such elements is not a system. The system is the function that selects odd numbers and excludes even numbers within the horizon of numbers: S = {x | x = 2n + 1, n ∈ ℤ}.

Any number, such as 2, 3.5, or 4i, is a candidate for membership, but this system excludes such other possibilities. The excluded possibilities constitute the environment of the system. The systems of even numbers and of irrational numbers belong to this environment. A system is a function that distinguishes itself from its environment. This is another formulation of the definition, though not a good one, because the definiens includes the definiendum.
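The odd-number example can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the names `is_odd`, `horizon`, `structure`, and `environment` are hypothetical labels chosen to mirror the text, and the horizon is a small hand-picked list rather than all numbers.

```python
def is_odd(x):
    """Selection rule: True only for integers of the form 2n + 1."""
    # bool is a subclass of int in Python, so it is excluded explicitly.
    return isinstance(x, int) and not isinstance(x, bool) and x % 2 == 1

# A small, hypothetical horizon of candidate numbers (the elements).
horizon = [1, 2, 3, 3.5, 4j, 5, -7]

# The selected combination is the structure; what is excluded is the environment.
structure = [x for x in horizon if is_odd(x)]
environment = [x for x in horizon if not is_odd(x)]

print(structure)    # [1, 3, 5, -7]
print(environment)  # [2, 3.5, 4j]
```

In this sketch the system corresponds to the function `is_odd` itself, not to the list it produces: the same rule applied to a different horizon yields a different structure and a different environment.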

Here are four concepts that are important for understanding the theory of systems:

- Element (in our example, numbers)

- Structure (a combination of numbers; odd numbers)

- System (selecting odd numbers)

- Environment (excluded other possible combinations)

Let’s consider these four concepts in detail.

Elements and Complexity

In systems theory, the number of possible ways to combine elements is called complexity. Complexity is a very misleading concept unless you distinguish it from compositeness as follows:

composite/single = whole/part

complex/simple = indeterminate/determinate

A composite system as a whole is composed of parts, and this plurality is compositeness. The way the parts are composed could be otherwise than it is, and this plurality across possible worlds is complexity.[2] To understand the distinction, let’s consider the word “book”. The word is composed of four letters, so it has compositeness. As the English alphabet has 26 letters, there are 26^4 (456,976) possible four-letter strings. If you select the combination “obko”, it is a meaningless string of letters. If you select “fuck”, it is a so-called four-letter word. As an information system, you expose yourself to an indeterminate environment, to the danger of meaninglessness and incorrectness. Such indeterminacy is complexity.

As complexity is a misleading term, I’d like to use a purely technical term, “entropy”, instead. Entropy is originally a thermodynamic term, so I’ll illustrate what entropy is with a thermodynamic example.
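The count for the “book” example can be checked with a short Python sketch. It assumes lowercase letters only and allows repeated letters, which is what a count of 26 to the fourth power implies; the names `ALPHABET`, `WORD_LENGTH`, `complexity`, and `log_complexity_bits` are illustrative, not from the original.

```python
import math
import string

ALPHABET = string.ascii_lowercase  # the 26 letters a-z
WORD_LENGTH = 4                    # compositeness of "book": four parts

# Complexity: the number of possible four-letter strings.
complexity = len(ALPHABET) ** WORD_LENGTH

# Logarithm of the count, in bits: how much indeterminacy one selection removes.
log_complexity_bits = math.log2(complexity)

print(complexity)                     # 456976
print(round(log_complexity_bits, 1))  # 18.8
```

Selecting “book” therefore picks out one possibility among 456,976, while the compositeness of the word stays fixed at four letters whichever string is chosen.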

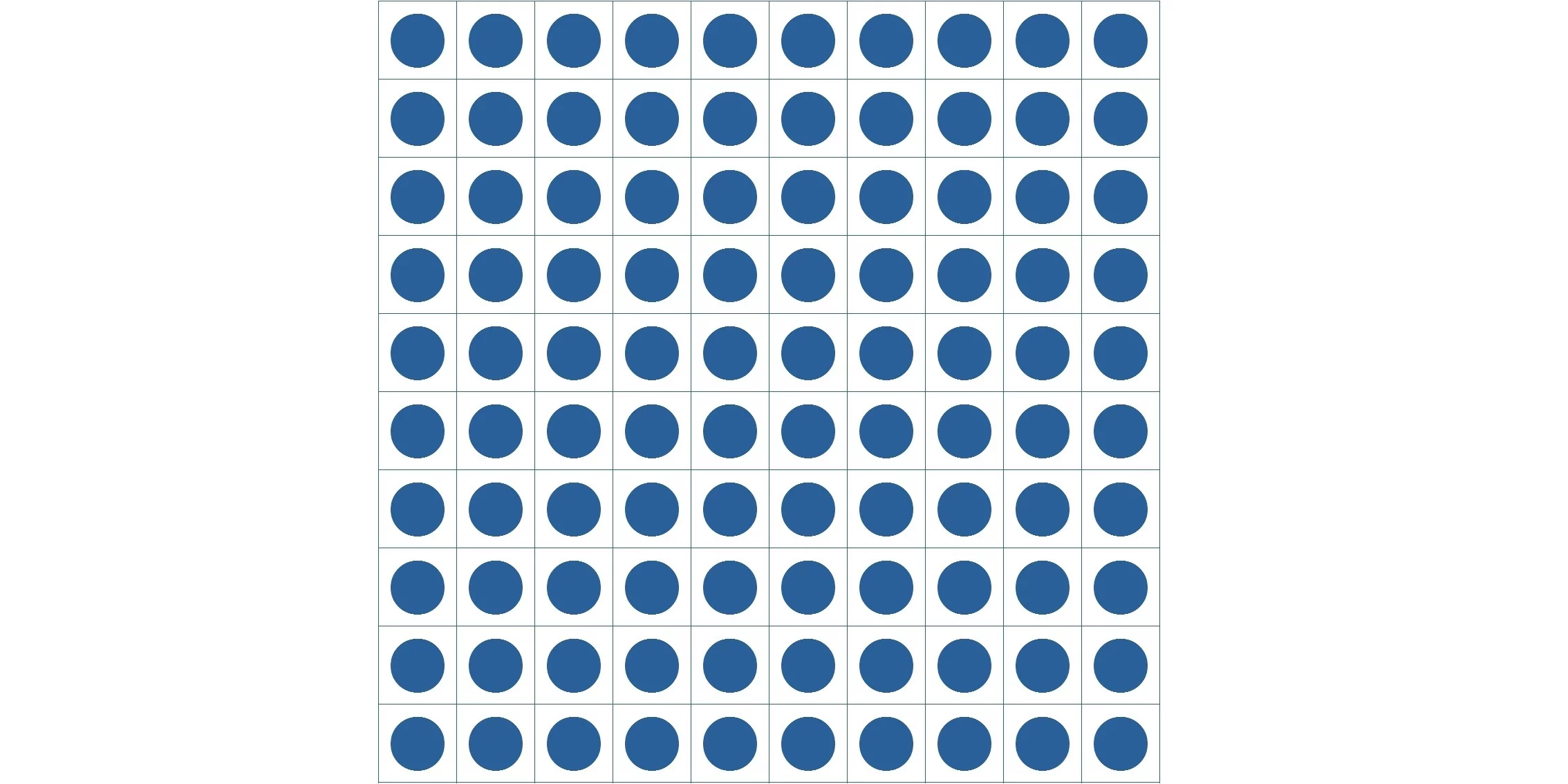

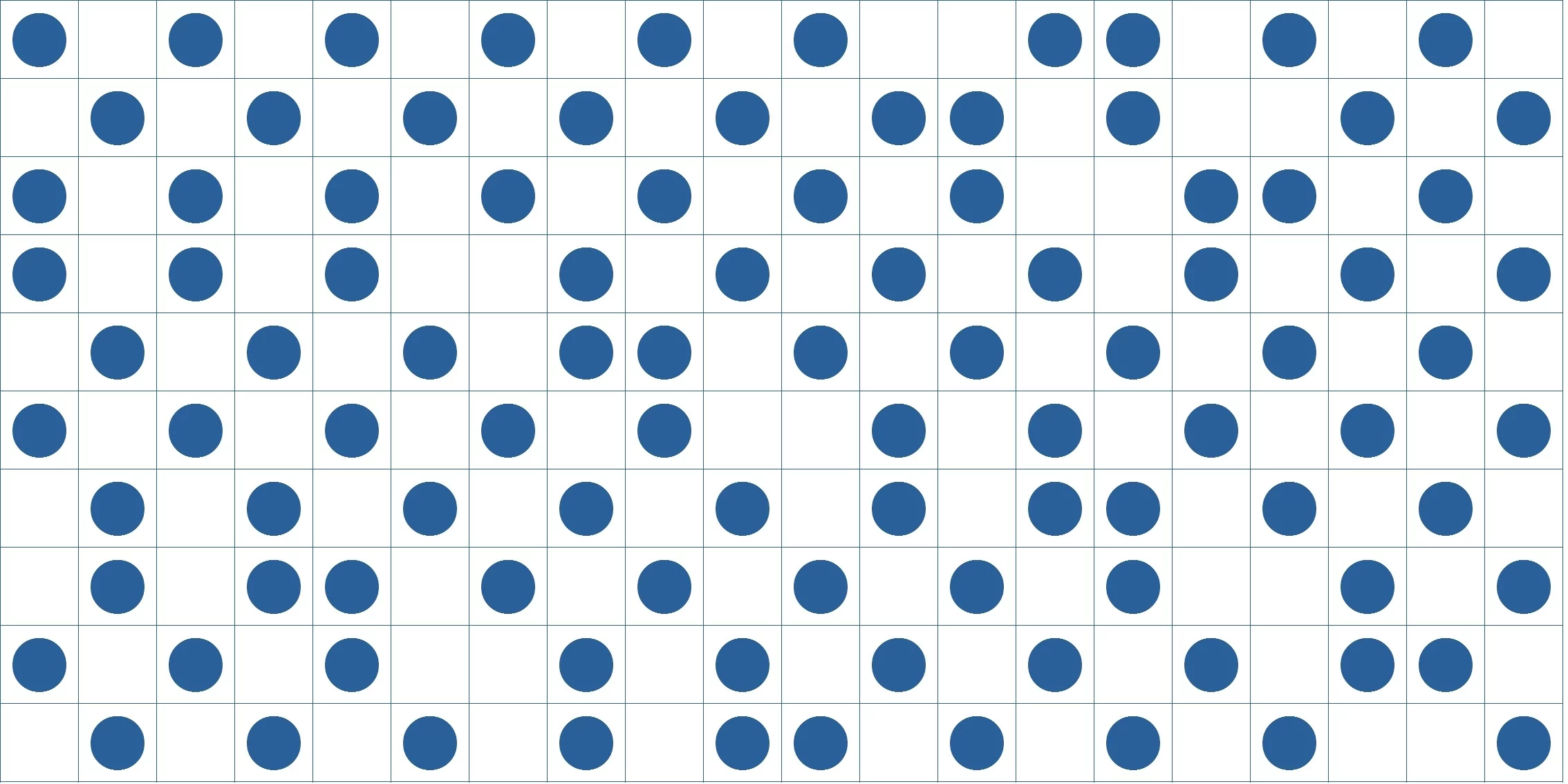

Suppose a certain volume of gas containing N molecules is heated and its volume doubles while its pressure stays constant.

If the gas in the upper figure is divided into N rooms, there is only one way to locate the molecules. When the number of rooms doubles, as depicted in the lower figure, there are 2^N ways of arrangement. Since entropy is the logarithm of complexity, and complexity is the reciprocal of probability, entropy increases by kN ln 2 (about 0.7Nk) in this case.
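The arithmetic behind this figure can be written out as a short derivation. It assumes Boltzmann's relation S = k ln W, where W (a symbol introduced here, not in the original) stands for the number of possible arrangements, and it takes the counts 1 and 2^N from the paragraph above as given.

```latex
\begin{align*}
W_1 &= 1, \qquad W_2 = 2^N \\
\Delta S &= k \ln W_2 - k \ln W_1 \\
         &= k \ln 2^N - k \ln 1 \\
         &= N k \ln 2 \approx 0.693\, N k
\end{align*}
```

Because the probability of any one arrangement is 1/W, the same result can be read as k times the logarithm of the reciprocal of that probability.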

In short, entropy is randomness, disorder, indeterminacy: the state of being able to be otherwise than it is. In other words, entropy must decrease if some form or order is to appear. Erwin Schrödinger described this decrease in entropy as negative entropy, a term later shortened to negentropy[3].

Systems and Structure

Negentropy creates a low-entropy state surrounded by a high-entropy environment. Negentropy is the function of the system, while low entropy is the property of its structure. A structure is itself a system if it is considered to enable itself, but it may also be enabled by a higher-order system.

The relation of a system to its structure or negentropy to low entropy is the same as that of the selecting to the selected or the founding to the founded, but it does not mean that systems are something different from their structures. It is important to notice that selecting systems and selected structures are interpenetrating. Those who select four-letter words are vulgar. That is to say, the system that selects the vulgar is itself vulgar. What a system selects determines what the system is. So, systems are inseparable from structure.

Another reason why it is fruitless to separate systems from structures is that the distinction is relative. Whether something is a system or a structure depends on the viewpoint. If you regard an iceberg as self-organizing, it is a system. If you attribute its existence to arctic conditions, the iceberg is a part of the structure (a subsystem) of the whole earth system.

Are there any absolutely independent systems in the world? Probably not. Every system is embedded in a network of founding relations. Therefore, the selected structure can practically be identified with the selecting system, though the conceptual distinction is possible and necessary, at least for philosophy.

Systems and Environment

Lastly, I will examine the concept of the environment. The system/environment relation is confused with the inside/outside relation as often as the system/element relation is confused with the whole/part relation. Such a spatial conception is naive and sterile.

Now how can you define inside and outside? The inside/outside distinction is no more objective than the temporal distinction between past, present, and future. Can you make these distinctions without referring to yourself as a subject? You can describe the inside of a sphere mathematically, that is, without reference to yourself: x^2 + y^2 + z^2 < r^2. But when you call that space “inside”, you are supposing, “If I were there, it would be inside for me”. Such a supposition is possible because of the similarity of the sphere to your body. The inside/outside distinction is therefore based on your body schema.

Our body is a system because it distinguishes itself from the environment, as is evident from the immune system. Even in the case of the body, the system/environment distinction does not coincide completely with the inside/outside distinction. A cancer increases entropy inside a body that strives to survive, that is to say, to reduce entropy. In this sense, cancer belongs to the environment of the body system, though it is located inside the body. With non-material systems, the difference is clearer. A traitor belongs to the environment of his social system, though he is an insider (otherwise he would be not a traitor but an ordinary enemy).

The system/environment relation corresponds not to the inside/outside relation but to the negentropy/entropy relation. The outside of a system is its spatial surroundings, while the environment is a logical space consisting of the other ways the system could have been. Though such possible systems might happen to be found outside the system, the distinction is still valid and necessary.

References

- Ludwig von Bertalanffy. General System Theory: Foundations, Development, Applications. George Braziller Inc.; Illustrated edition (June 15, 2015).

- Erwin Schrödinger. What is Life? – With Mind and Matter and Autobiographical Sketches. Cambridge University Press; Reprint edition (March 26, 2012).

- Niklas Luhmann. Theory of Society. Stanford University Press; 1st edition (October 10, 2012).

- [1] This is an updated version of the original article, which is cached at “What is a System?.” 18 Feb 2006.

- [2] According to Sholom Glouberman and Brenda Zimmerman, complicated systems are deterministic, while complex systems are probabilistic. Cf. Glouberman, Sholom and Brenda Zimmerman. “Complicated and Complex Systems: What Would Successful Reform of Medicare Look Like?”. Public Works and Government Services, Canada. Accessed March 17, 2015. p. 10.

- [3] “Every process, event, happening -call it what you will; in a word, everything that is going on in Nature means an increase of the entropy of the part of the world where it is going on. Thus a living organism continually increases its entropy -or, as you may say, produces positive entropy -and thus tends to approach the dangerous state of maximum entropy, which is of death. It can only keep aloof from it, i.e. alive, by continually drawing from its environment negative entropy -which is something very positive as we shall immediately see. What an organism feeds upon is negative entropy.” ― Erwin Schrödinger. What is Life? – With Mind and Matter and Autobiographical Sketches. Cambridge University Press. 1944. Chapter 6.

Discussion

I agree, but have a suggestion: If I understand where you are going, you are saying that the human condition is based upon systems or selection sets. Human systems typically resist entropy in ways that are not only organized and rule following, but also chaotic and unpredictable because the selection rules implicit in human systems do not predict the outcome, so the old mechanistic determinism is an inadequate model of the human condition.

You say: “A system is nothing but selection.” While this is true of all systems, living systems, especially human social systems, have very different selection rules from systems that are not maintained by life forms.

If you want to jump from systems as selection rules that avoid entropy to a better understanding of the human condition, you might want to check out some unique properties of human systems.

Future generations who advance the development of your paradigm might benefit from Talcott Parsons’s idea that every human or organic system is a solution to four functional problems: resources, goals, integration, and the latency function, which combines pattern maintenance and tension management. For the social system of a society, solving the problem of resources is the function of the economic system, where money is the medium of exchange. The problem of national goals is the function of the political system, where power is the medium of exchange. The problem of integration is the function of the legal system. Pattern maintenance and tension management are dealt with by various socializing institutions like the family.

A theory which transcends functionalist theory (for example Parsons or Malinowski) and conflict theory (for example Machiavelli or Marx) may help us model the interplay between the order of system theory and the organized disorder of chaos theory.

I do not believe in old mechanistic determinism. As stated in the following article, not only human social systems but also natural material systems can be chaotic and unpredictable though they follow some determinate rules.

You said, “A theory which transcends functionalist theory (for example Parsons or Malinowski) and conflict theory (for example Machiavelli or Marx) may help us model the interplay between the order of system theory and the organized disorder of chaos theory.”

Yes, that’s the theme of the theory of complex systems. The very name “complex systems” indicates it: complexity means indeterminacy and system means determinacy.

Although a system reduces entropy, reducing entropy only results in an increase in entropy in its environment. In other words, indeterminacy must increase in order to maintain systems. This paradox is explained by the second law of thermodynamics, and I applied it to information and social systems.

You mentioned Parsons’s AGIL scheme. I agree with you that, to work out the theory of social systems, it is necessary to study the role of communication media such as money, which reduce the double contingency of social relations.